Lxcfs Resource View Isolation

1. Resource View Isolation

/proc/cpuinfo

/proc/diskstats

/proc/meminfo

/proc/stat

/proc/swaps

/proc/uptime

2. Introduction to Lxcfs

# /usr/local/bin/lxcfs -h

Usage:

lxcfs [-f|-d] -u -l -n [-p pidfile] mountpoint

-f running foreground by default; -d enable debug output

-l use loadavg

-u no swap

Default pidfile is /run/lxcfs.pid

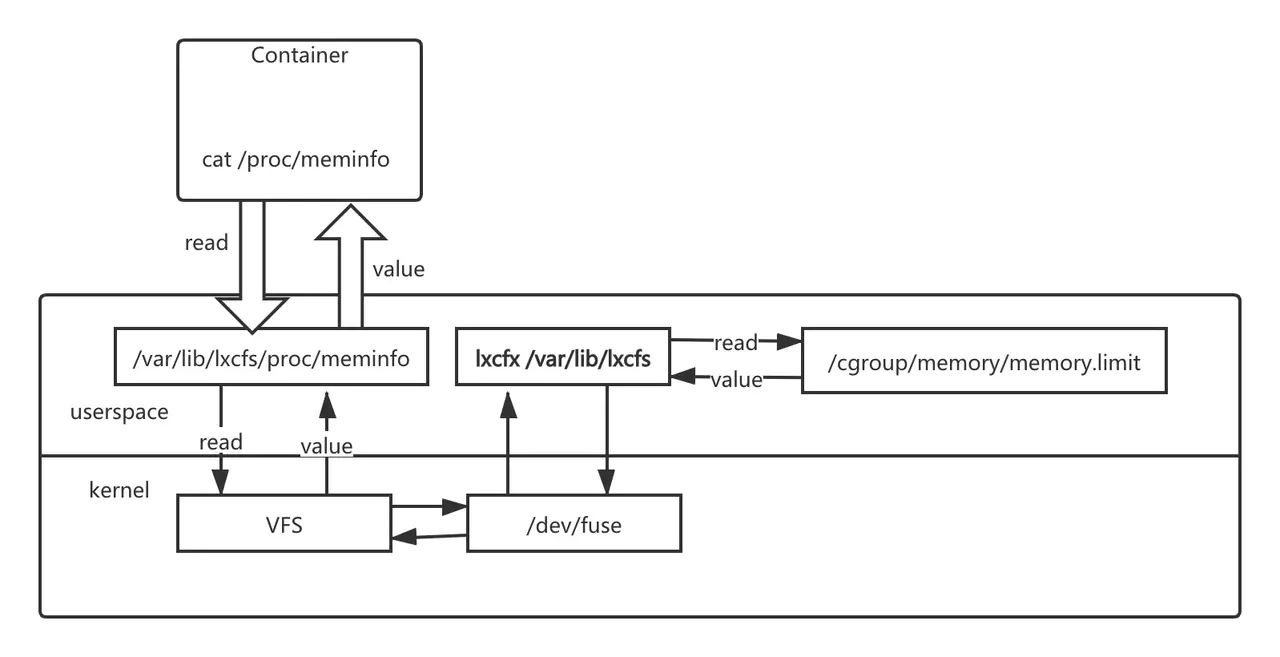

lxcfs -h

3. How Lxcfs Works

| Category | Path inside the container | lxcfs path on the host |
| --- | --- | --- |

4. Usage

4.1. Install lxcfs

4.2. Run lxcfs

4.3. Mount the files under /proc inside the container
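
As a minimal sketch of this step, the helper below prints one Docker bind-mount flag for each of the /proc files listed in section 1. It assumes lxcfs was started on the mountpoint /var/lib/lxcfs; that path and the function name `lxcfs_mount_flags` are illustrative assumptions, not taken from the original text, so substitute the mountpoint you actually passed to lxcfs.

```shell
#!/bin/sh
# Assumed lxcfs mountpoint (hypothetical default; use the one given to lxcfs).
LXCFS_ROOT=/var/lib/lxcfs

# Print a "-v host:container:rw" flag for each /proc file that lxcfs provides.
lxcfs_mount_flags() {
  for f in cpuinfo diskstats meminfo stat swaps uptime; do
    printf '%s %s/proc/%s:/proc/%s:rw ' "-v" "$LXCFS_ROOT" "$f" "$f"
  done
}
```

A container could then be started with something like `docker run -it -m 256m $(lxcfs_mount_flags) ubuntu:18.04 /bin/bash`, where the image name and memory limit are only examples.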

4.4. Verify CPU and memory inside the container
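
One way to check the result, sketched here under the assumption that the container was started with the lxcfs files mounted over /proc, is to read the CPU count and total memory that the container sees. The function names `visible_cpus` and `visible_mem_kb` are hypothetical helpers, not part of lxcfs.

```shell
#!/bin/sh
# Count the logical CPUs listed in a cpuinfo-style file (default: /proc/cpuinfo).
visible_cpus() {
  grep -c '^processor' "${1:-/proc/cpuinfo}"
}

# Print MemTotal in kB from a meminfo-style file (default: /proc/meminfo).
visible_mem_kb() {
  awk '/^MemTotal:/ { print $2 }' "${1:-/proc/meminfo}"
}
```

Run inside the container, `visible_mem_kb` should report a value close to the container's memory limit rather than the host total if the lxcfs view is in effect; without the mounts it reports the host's memory.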

5. Deploying on a k8s cluster

5.1. lxcfs-image

5.2. daemonset
